In March of this year, Intel acquired Mobileye, the largest chip company in the field of autonomous driving, for about $15 billion. News of the acquisition instantly flooded social media, but on reflection the deal makes sense. Since last year, Intel has been determined to make a big push into artificial intelligence and has gone on a buying spree. In 2016 it acquired Nervana and Movidius in succession, two companies that specialize in server-side and mobile-side machine learning systems, respectively. Apart from server-side AI services (Nervana) and lightweight, low-latency AI applications on mobile devices (Movidius), the hottest area of artificial intelligence today is autonomous driving, so it is logical that Intel chose Mobileye as its next acquisition target after Nervana and Movidius.

From Mobileye's perspective, its cooperation with Intel has been under way for some time. Since ending its partnership with Tesla, Mobileye has wholeheartedly joined Intel's autonomous driving camp, forming an alliance with Intel and BMW to compete against Nvidia and Tesla. The alliance is still in its honeymoon period; Intel and Mobileye have grown close and are expected to make further moves together in the future.

Qualcomm, for its part, announced last year that it would acquire the automotive electronics giant NXP. The deal was huge, drew the attention of almost everyone in the semiconductor industry, and demonstrated Qualcomm's determination to expand beyond the mobile phone industry. The two companies' businesses overlap very little, so the acquisition is largely complementary, and NXP's automotive electronics business will become an important part of Qualcomm's future landscape.

Both Intel and Qualcomm are targeting the future of automotive electronics. The area with the greatest potential is clearly driverless cars, but Intel and Qualcomm have chosen two distinct roads to driverless technology. Below is a detailed analysis of the two companies' technical blueprints.

Intel: Build a robot to drive for you

Intel started out in microprocessors and became the world's largest semiconductor company on the back of the spread of computers in the last century. Precisely because of that success, it tried to approach the mobile device business the same way it had approached computers, met its Waterloo, and missed the mobile Internet. The next big wave is artificial intelligence, and Intel certainly does not want to miss out again, so it has invested heavily again and again.

Intel has always been close to artificial intelligence. Artificial intelligence has always been a branch of computing, and Intel's ties to computing need no explanation, so Intel's move into artificial intelligence is not a transformation but rather a slight shift in the focus of its computing business. Intel's approach to autonomous driving is also based on artificial intelligence, so the analysis has to start with how artificial intelligence implements autonomous driving.

Ever since the computer was born, computer scientists have been asking whether computers can reproduce the human ability to think. Turing, the founding father of computer science, considered this problem and proposed the famous "Turing test" (whether a human can tell if they are conversing with a person or a computer) to help determine whether a computer has actually achieved artificial intelligence. In the middle of the last century, Minsky and others made outstanding contributions to the field, but its development then fell into a slump. It was only about a decade ago that deep learning based on deep neural networks re-emerged and the world's attention returned to artificial intelligence. A neural network is a biologically inspired artificial intelligence algorithm. The landmark event in its rise was the arrival of AlexNet in 2012, which greatly improved the accuracy of object recognition on the ImageNet dataset. Since then, neural networks have grown deeper and deeper, from a dozen or so layers to the more than one hundred layers of Microsoft's ResNet, and networks with more than a thousand layers have even appeared recently.

Intel has chosen artificial intelligence as its technical route to autonomous driving, with the ultimate goal of creating an artificial intelligence driving system. The system can be thought of as a robot that uses sensors to perceive the car's surroundings and makes decisions with artificial intelligence algorithms, reaching the same level of driving as a human. Technically, Intel is responsible for the robot's brain (the computing part; Intel is the processor industry leader and its chips can provide ample computing power), while Mobileye is responsible for the robot's eyes (the sensor signal processing chip; the volume of raw sensor data is very large, so a dedicated high-efficiency chip must pre-process it before sending it to the back-end general-purpose processor that makes the driving decisions).

Intel's autonomous driving system requires a variety of sensors, including cameras, millimeter-wave radar, lidar, and ultrasonic sensors. Intel bought Mobileye to give the system a pair of keen eyes and to enable efficient sensor fusion. Both companies have accumulated algorithm expertise, and better algorithms can be expected once the two are integrated. As for data, the major automakers working with Intel will need to supply it for training the deep learning algorithms.
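The division of labor described above (a front-end stage that condenses raw sensor data, followed by fusion and a back-end decision) can be illustrated with a small sketch. This is only a toy model under stated assumptions, not Intel's or Mobileye's actual design: the names `Detection`, `preprocess`, `fuse`, and `decide`, the confidence-weighted average, and the 20 m braking threshold are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    sensor: str        # hypothetical label, e.g. "camera" or "radar"
    distance_m: float  # estimated distance to the nearest obstacle
    confidence: float  # assumed trust in this sensor, between 0 and 1


def preprocess(sensor: str, raw_samples: List[float], confidence: float) -> Detection:
    """Front-end stage: reduce a bulky raw stream to one compact detection.

    As a stand-in for real signal processing, keep only the smallest
    distance reading in the raw samples.
    """
    return Detection(sensor, min(raw_samples), confidence)


def fuse(detections: List[Detection]) -> float:
    """Sensor fusion: confidence-weighted average of the distance estimates."""
    total = sum(d.confidence for d in detections)
    return sum(d.distance_m * d.confidence for d in detections) / total


def decide(distance_m: float, brake_below_m: float = 20.0) -> str:
    """Back-end stage: a trivial rule standing in for the decision algorithm."""
    return "brake" if distance_m < brake_below_m else "cruise"


if __name__ == "__main__":
    detections = [
        preprocess("camera", [32.0, 30.0, 31.0], confidence=0.5),
        preprocess("radar", [28.0, 29.0], confidence=0.5),
    ]
    print(decide(fuse(detections)))
```

In a real system each stage would be far more elaborate (the decision step, for instance, would be a trained deep learning model rather than a threshold), but the data flow is the same: many large raw streams go in, a few compact detections come out, and only those reach the general-purpose processor.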

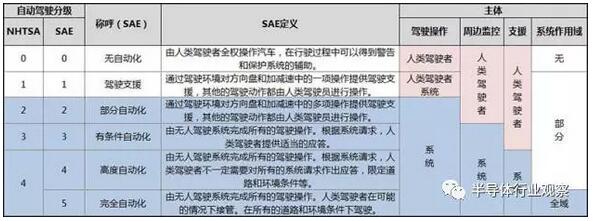

Intel's choice of neural-network artificial intelligence for autonomous driving is a biomimetic design, and the thing it imitates is the human being. It amounts to replacing the human eye with sensors and the human brain with deep learning algorithms. Seen from another angle, the limits of human ability basically determine the limits of such a system. In the autonomous driving classification, the system can support Level 3 (conditional automation) without difficulty, because at that level the system does not completely take over the car. The human driver is like a driving instructor sitting in the passenger seat, and the autonomous driving system is a novice driver (although this novice drives better than a real veteran most of the time). As soon as the system runs into trouble, the veteran steps in to avoid an accident.
