To create an AI robot in a game, developers usually hand-write a set of rules in code. In most cases this lets the robot imitate people quite convincingly, but it still differs fundamentally from a human: most players can tell at a glance whether a teammate or opponent is a real person or an AI.

So, apart from hand-writing rules and hard-coding behavior, is there another way to make game AI more realistic? If we want an AI to learn to play a game by observing human behavior, what should we do?

To explore these questions, we first need a game that provides a lot of gameplay data, such as FIFA. We will use FIFA 18, the latest title in the series, as an example, and train an end-to-end deep learning robot on a large amount of gameplay video that records player behavior and decisions. Note that we do not hard-code a single game rule.

GitHub repository: github.com/ChintanTrivedi/DeepGamingAI_FIFA.git

Gameplay mechanism

Because we cannot access the game's internal code, the first priority is to build the basic mechanism through which the robot plays. This is actually an advantage, because one premise of the project is that it does not rely on any internal game information. The robot therefore sees only a screenshot of the game window, which is exactly what the player sees. It processes this visual information to produce the desired action, and a controller simulator passes that action to the game. The screenshot is then refreshed and the cycle repeats.
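The cycle described above can be sketched in a few lines. This is a minimal illustration, not the project's code: `grab_frame`, `choose_action` and `press_key` are hypothetical callables standing in for the screenshot grabber, the trained model and the controller simulator.

```python
from typing import Callable, List, Sequence

# Hypothetical stand-in for a screenshot: rows of pixel values.
Frame = Sequence[Sequence[int]]


def run_bot(
    grab_frame: Callable[[], Frame],
    choose_action: Callable[[Frame], str],
    press_key: Callable[[str], None],
    max_steps: int,
) -> List[str]:
    """Run the see-decide-act cycle for a fixed number of steps."""
    history: List[str] = []
    for _ in range(max_steps):
        frame = grab_frame()           # the robot sees what the player sees
        action = choose_action(frame)  # the model turns pixels into an action
        press_key(action)              # the controller simulator forwards it
        history.append(action)
    return history


if __name__ == "__main__":
    # Stub components so the loop can run without a game.
    pressed: List[str] = []
    run_bot(lambda: [[0, 0], [0, 0]], lambda frame: "shot", pressed.append, 3)
    print(pressed)
```

Passing the three components in as arguments keeps the loop independent of any particular screen-capture or input library.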

As shown in the figure above, we now have a framework that feeds input to the robot and sends out its instructions for controlling the game character. This brings us to the interesting part: learning game intelligence. It can be divided into two steps: (1) understanding the screenshot with a convolutional neural network (CNN); (2) using that understanding to make decisions with a long short-term memory (LSTM) network.

Step 1: Train a convolutional neural network (CNN)

CNNs are known for detecting objects in images accurately. Combined with high-performance GPUs and smarter network architectures, they give us a CNN model that can run in real time.

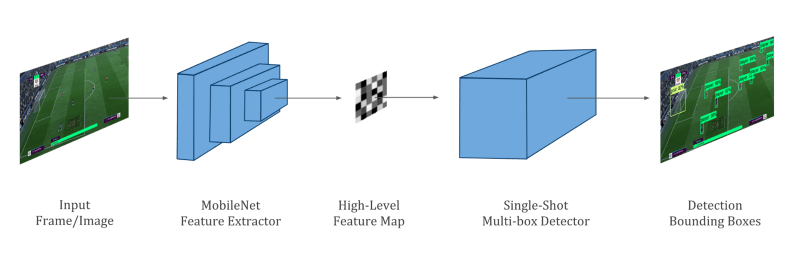

For the robot to understand the input image, we use a lightweight, fast CNN called MobileNet. The feature maps it extracts represent a high-level understanding of the screenshot, such as where the players and other objects of interest are on the screen. The SSD object detection model then uses them to detect the players, the ball and the goal on the pitch.

Step 2: Train long short-term memory networks (LSTM)

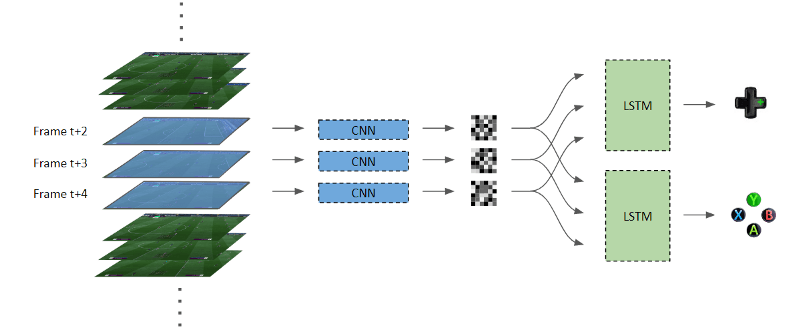

Now that the image is understood, the robot can start making decisions. But we do not want it to look at a single frame and act on that alone. We want it to look at a short sequence of images. This is why LSTMs are introduced: they can model temporal sequences in video data. We treat successive frames as time steps, use the CNN model to extract feature maps from each frame, and feed these simultaneously into two LSTM networks.
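One way to turn a stream of per-frame features into short sequences is a sliding window. The sketch below is an illustration under assumptions: the window length and the plain-list feature vectors are placeholders, and the article does not state how many frames the original project uses per sequence.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, List, Sequence

# Hypothetical stand-in for the CNN features of one frame.
FeatureVector = Sequence[float]


def sliding_windows(
    features: Iterable[FeatureVector], window: int
) -> Iterator[List[FeatureVector]]:
    """Yield overlapping sequences of `window` consecutive frame features."""
    if window < 1:
        raise ValueError("window must be at least 1")
    buffer: Deque[FeatureVector] = deque(maxlen=window)
    for feature in features:
        buffer.append(feature)  # the oldest frame drops out automatically
        if len(buffer) == window:
            yield list(buffer)  # one LSTM input: `window` time steps


if __name__ == "__main__":
    frames = [[0.1], [0.2], [0.3], [0.4]]
    for sequence in sliding_windows(frames, window=3):
        print(sequence)
```

Each yielded sequence would be one input sample for the LSTMs, with every frame in it acting as a time step.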

The first LSTM is responsible for learning how the player needs to move, so it is a multi-class classification model. The second LSTM receives the same input as the first, but it must decide which action to take out of cross, through ball, short pass and shot: another multi-class classification model. We convert the outputs of these two classifiers into button presses to control the game.
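The conversion from two classifier outputs to button presses can be sketched as follows. This assumes the first network scores movement directions and the second scores the four actions named above. The label order and the key bindings are hypothetical and are not taken from the project.

```python
from typing import Dict, List, Sequence

# Assumed label sets: one per classifier head.
MOVES: List[str] = ["up", "down", "left", "right"]
ACTIONS: List[str] = ["cross", "through_ball", "short_pass", "shot"]

# Hypothetical key bindings, for illustration only.
MOVE_KEYS: Dict[str, str] = {"up": "w", "down": "s", "left": "a", "right": "d"}
ACTION_KEYS: Dict[str, str] = {
    "cross": "q",
    "through_ball": "e",
    "short_pass": "x",
    "shot": "space",
}


def argmax(scores: Sequence[float]) -> int:
    """Index of the highest score (first one wins on ties)."""
    return max(range(len(scores)), key=lambda i: scores[i])


def to_buttons(
    move_scores: Sequence[float], action_scores: Sequence[float]
) -> List[str]:
    """Pick the top class of each head and map both to keys."""
    if len(move_scores) != len(MOVES) or len(action_scores) != len(ACTIONS):
        raise ValueError("score vectors must match the label sets")
    move = MOVES[argmax(move_scores)]
    action = ACTIONS[argmax(action_scores)]
    return [MOVE_KEYS[move], ACTION_KEYS[action]]


if __name__ == "__main__":
    print(to_buttons([0.1, 0.2, 0.6, 0.1], [0.05, 0.05, 0.2, 0.7]))
```

Keeping the two heads separate means a movement key and an action key can be pressed in the same step.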

Evaluate the performance of the robot

We know of no way to test the robot's performance other than letting it play the game directly. After 400 minutes of training, the robot learned to move toward the opponent's goal, pass forward, and shoot when it detected the goal. In FIFA 18's beginner mode, our robot scored 4 goals in 6 regular games, one more than Paul Pogba in the 17/18 season.

Summary

This is just one way to build a game robot, and the results so far are encouraging. Training also exposed two problems. First, the robot could not tell its own team from the opponent. Second, it sometimes sent the ball backward partway up the pitch and started running back. For the first problem, the author's solution is to use screenshots and button presses as training data for supervised learning, and always to play the same home team against different visiting teams. Over time, the robot learns to tell its own team from the opponents. For the second problem, one commenter proposed a simpler solution: split the pitch in two at the halfway line, mirror the screenshots from one side, and adjust the directions to match, which gets more out of the same training data.
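One reading of the flipping suggestion is a mirror augmentation: flip each screenshot left to right and swap the left and right movement labels so the sample stays consistent. The sketch below illustrates that reading only. The nested-list frame and the label names are assumptions, not the project's data format.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical stand-in for a screenshot: rows of pixel values.
Frame = Sequence[Sequence[int]]

# Only horizontal directions change when the image is mirrored.
MIRRORED: Dict[str, str] = {"left": "right", "right": "left"}


def flip_sample(frame: Frame, move: str) -> Tuple[List[List[int]], str]:
    """Mirror a frame left to right and adjust its movement label."""
    flipped = [list(reversed(row)) for row in frame]
    return flipped, MIRRORED.get(move, move)


if __name__ == "__main__":
    frame = [[1, 2, 3], [4, 5, 6]]
    print(flip_sample(frame, "left"))
```

Applying `flip_sample` to every recorded sample would give the robot examples of attacking in both directions from the same footage.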

With limited training, the robot has mastered the basic rules of the game: move toward the goal and kick the ball into the net. With more training, we believe it could behave more like a human than existing game AI, and it is easier to create. In addition, if we can extend this experiment and train it on real match data, we believe the robot's behavior could become more natural and realistic. So perhaps game developers could change the way they build AI. Right, EA?
