Let's start with what machine learning is. Machine learning is a fairly general concept.

At first glance, machine learning, artificial intelligence, and data mining seem to describe much the same thing. The actual relationship between them can be summarized as follows:

Machine learning is a subfield of artificial intelligence.

Machine learning is one way of implementing data mining.

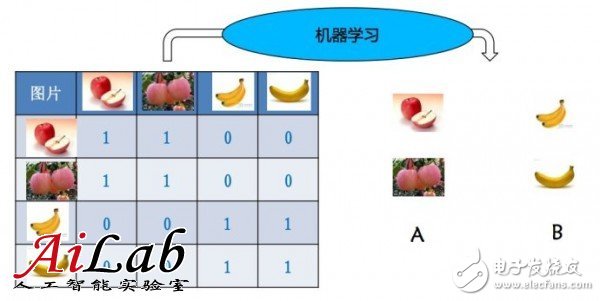

As a simple example, given some apples and bananas, people pick out the features that distinguish them and remember those features. The next time they see a new apple or banana, they can tell which one it is.

As with any algorithm, or any mathematical problem solved by a program, there must be some input and some output.

For machine learning, the input is a feature vector or, more generally, a matrix.

If there is only one feature, the input is a vector. Of course, a vector is just a degenerate (single-column) matrix.

• At its core, current machine learning is matrix-based optimization:

Input: a matrix, the information to learn from

Output: a model, a summary of the rules

Therefore, current mainstream machine learning can be formalized as follows:

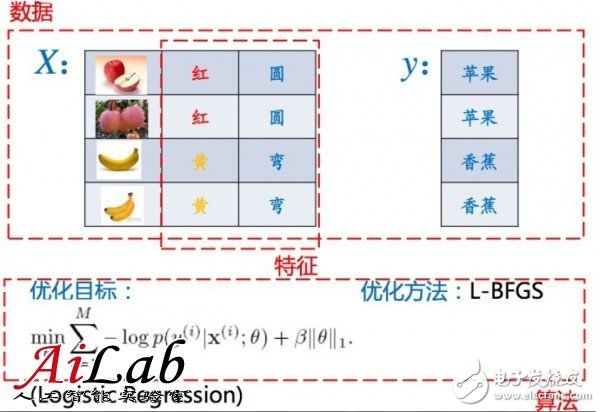

• Input: feature matrix X, label vector y

X is the feature matrix; it excludes the "sample name" and "sample label" columns

y is the label vector, i.e. the "sample label" column

• Output: model vector w

• Expectation: X·w is as close as possible to y

Many optimization algorithms can solve for w; they differ in how they define "as close as possible".
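One common way to make "as close as possible" concrete is squared error: minimize the sum of (x_i·w - y_i)^2 over all rows of X. The sketch below is a minimal, dependency-free illustration under that assumption. It solves the normal equations (XᵀX)w = Xᵀy by Gaussian elimination on a small, made-up dataset generated from a known w, so the recovered w can be checked. The helper names are hypothetical; real systems would use a numerical library and, for large data, iterative optimizers.

```python
def transpose(m):
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    # Each output entry is a row of a dotted with a column of b.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def solve(a, b):
    """Solve the square system a·x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))  # choose the pivot row
        m[c], m[p] = m[p], m[c]
        for r in range(c + 1, n):
            f = m[r][c] / m[c][c]
            for k in range(c, n + 1):
                m[r][k] -= f * m[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x

def least_squares(X, y):
    """Return w minimizing ||Xw - y||^2 via the normal equations."""
    Xt = transpose(X)
    XtX = matmul(Xt, X)
    Xty = [sum(a * b for a, b in zip(row, y)) for row in Xt]
    return solve(XtX, Xty)

# Made-up data generated from the "true" model w = [0.5, 2.0].
X = [[1.0, 2.0], [2.0, 1.0], [3.0, 4.0], [4.0, 3.0]]
y = [0.5 * a + 2.0 * b for a, b in X]
w = least_squares(X, y)
print(w)  # approximately [0.5, 2.0]
```

Squared error is only one choice; the LR and SVM objectives discussed below define closeness differently.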

For example, consider predicting the click-through rate (CTR) of an ad.

In this example, the goal is to predict whether the target ad will be clicked, based on known features.

There are only two features, the query keyword and "whether the ad is red", and the outcome to predict is whether the ad is clicked.

Now that we have a problem, an input, and an output, we can apply a suitable algorithm to solve it.
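To make the input concrete, the sketch below turns hypothetical ad log rows into X and y. The rows, keyword values, and the helper `build_xy` are invented for illustration. The query keyword is one-hot encoded (one 0/1 column per keyword) and "whether it is red" becomes a single 0/1 column; as in the formalization above, the click label goes into y and is kept out of X.

```python
# Hypothetical log rows: (query keyword, ad is red?, clicked?)
rows = [
    ("phone", True, 1),
    ("phone", False, 0),
    ("shoes", True, 0),
    ("shoes", False, 1),
    ("laptop", True, 1),
]

def build_xy(rows):
    """Build feature matrix X and label vector y from raw rows."""
    keywords = sorted({kw for kw, _, _ in rows})  # vocabulary for one-hot encoding
    index = {kw: i for i, kw in enumerate(keywords)}
    X, y = [], []
    for kw, is_red, clicked in rows:
        features = [0] * len(keywords)
        features[index[kw]] = 1              # one-hot query keyword
        features.append(1 if is_red else 0)  # "whether it is red"
        X.append(features)
        y.append(clicked)                    # label goes into y, not X
    return keywords, X, y

keywords, X, y = build_xy(rows)
print(keywords)  # ['laptop', 'phone', 'shoes']
for features, label in zip(X, y):
    print(features, label)
```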

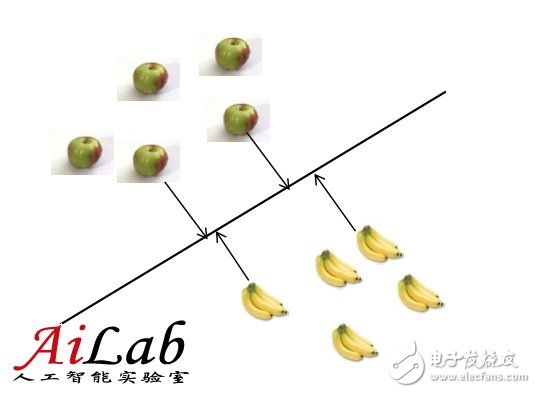

The definition above hides a key point: X·w should be "as close as possible" to y.

Different ways of defining this "closeness" lead to different algorithms.

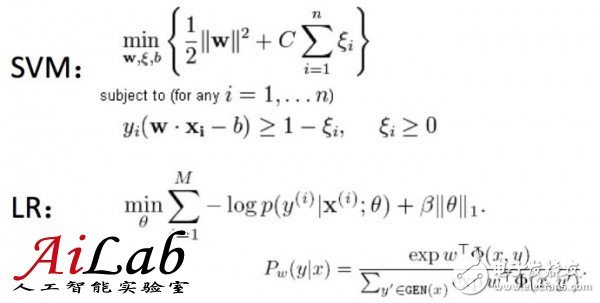

For example, LR (Logistic Regression) and SVM (Support Vector Machine) differ in exactly this way. The details of the two algorithms are not the focus here; what matters is that, mathematically, they define "close" differently.

In academic terms, they have different "optimization objectives."
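For reference, the standard textbook forms of the two objectives are below, assuming labels y_i ∈ {-1, +1}, x_i as the i-th row of X, and the usual regularization terms (λ and C are hyperparameters). These are the common formulations and may differ in detail from what the author had in mind. Both penalize the score x_i·w for disagreeing with y_i, but LR uses the logistic loss and SVM uses the hinge loss:

$$
\text{LR:}\quad \min_{w}\; \frac{\lambda}{2}\lVert w\rVert^2 + \sum_{i=1}^{n} \log\bigl(1 + e^{-y_i\, x_i \cdot w}\bigr)
$$

$$
\text{SVM:}\quad \min_{w}\; \frac{1}{2}\lVert w\rVert^2 + C \sum_{i=1}^{n} \max\bigl(0,\; 1 - y_i\, x_i \cdot w\bigr)
$$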

Whatever the differences, we must at least choose one optimization objective.

Once we have an optimization objective, the next step is to solve it.

The methods in common use today are mainly:

L-BFGS, CDN (coordinate descent Newton), and SGD (stochastic gradient descent)
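As an example of the last of these, here is a minimal SGD sketch for the logistic-regression objective. It assumes 0/1 labels, a constant 1 column for the bias, no regularization, and a fixed learning rate; the function name `sgd_logistic` and all hyperparameter values are illustrative choices. Each step looks at a single sample and moves w against the gradient of that sample's log loss, which is (p - y_i)·x_i. L-BFGS and CDN are not shown, and production code would normally rely on a tested library.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def sgd_logistic(X, y, lr=0.1, epochs=200, seed=0):
    """Plain SGD on the (unregularized) logistic loss; labels y are 0/1."""
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)  # visit samples in a fresh random order each epoch
        for i in order:
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, X[i])))
            g = p - y[i]    # derivative of this sample's log loss w.r.t. the score x_i·w
            w = [wj - lr * g * xj for wj, xj in zip(w, X[i])]
    return w

# Toy data: first column is the feature, second is a constant 1 acting as the bias.
X = [[-3.0, 1.0], [-2.0, 1.0], [-1.0, 1.0], [1.0, 1.0], [2.0, 1.0], [3.0, 1.0]]
y = [0, 0, 0, 1, 1, 1]
w = sgd_logistic(X, y)
preds = [1 if sigmoid(sum(wj * xj for wj, xj in zip(w, x))) > 0.5 else 0 for x in X]
print(w, preds)
```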

Putting these elements together gives a complete machine learning problem.

In practice, three aspects need to be optimized:

Algorithm, data, and features

Let's look at each in turn.

The algorithm consists of an optimization objective plus an optimization method.

As for the optimization objective, industry often lacks the resources or expertise to research new algorithms, so most teams use existing algorithms or extend them.

As for the optimization method, there are three main concerns:

Smaller computational cost

Faster convergence

Better parallelism

These three points are easy to understand. In an engineering setting, lower computational cost frees the machines for other work; faster convergence lets you put the results into use sooner; and better parallelism lets you use existing big data frameworks to solve the problem.
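The parallelism point follows from the shape of most objectives: the loss is a sum over samples, so its gradient is a sum of per-shard gradients that can be computed independently and then added. The sketch below illustrates this with the logistic loss, assuming 0/1 labels and made-up data; the helpers `shard_gradient` and `parallel_gradient` are hypothetical. Python threads are used only to show the split-and-sum pattern (CPython threads do not speed up pure-Python arithmetic); in a big data framework each shard would sit on a different worker.

```python
import math
from concurrent.futures import ThreadPoolExecutor

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def shard_gradient(w, shard):
    """Gradient of the summed logistic loss over one shard of (x, y) pairs, y in {0, 1}."""
    g = [0.0] * len(w)
    for x, y in shard:
        p = sigmoid(sum(wj * xj for wj, xj in zip(w, x)))
        for j, xj in enumerate(x):
            g[j] += (p - y) * xj
    return g

def parallel_gradient(w, data, n_shards=3):
    """Split data into shards, compute each shard's gradient concurrently, then add them."""
    shards = [data[i::n_shards] for i in range(n_shards)]
    with ThreadPoolExecutor(max_workers=n_shards) as pool:
        partials = list(pool.map(lambda shard: shard_gradient(w, shard), shards))
    return [sum(parts) for parts in zip(*partials)]

# Made-up data and weights.
data = [([1.0, 0.5], 1), ([0.2, -1.0], 0), ([-0.3, 0.8], 1), ([1.5, 1.5], 0), ([0.0, 2.0], 1)]
w = [0.3, -0.2]
print(parallel_gradient(w, data))
print(shard_gradient(w, data))  # same values, up to floating-point rounding
```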

Training data should match the actual distribution as closely as possible and be as complete as possible.

Because machine learning algorithms usually minimize the total expected error, classes with little data are very likely to be misclassified.
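A toy illustration of this effect, with invented numbers: on 95 negatives and 5 positives, a model that always predicts the majority class already reaches 95% accuracy, which is what a total-error objective rewards, while getting every minority sample wrong.

```python
# Hypothetical labels: 95 negatives, 5 positives (the minority class).
y_true = [0] * 95 + [1] * 5
y_pred = [0] * 100  # a "model" that always predicts the majority class

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
minority_recall = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1) / y_true.count(1)
print(accuracy, minority_recall)  # 0.95 0.0
```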

If the sample data differ greatly from the actual data, the algorithm generally performs poorly in practice.

The need for complete data is easy to explain: the more complete the data, the more thorough the training, just as doing more practice exercises before an exam usually leads to a better result.

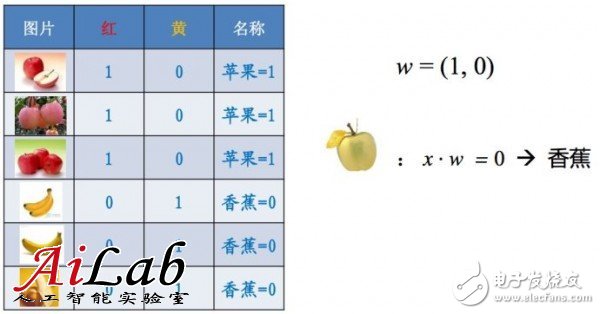

Features should carry as much information as possible for identifying the object.

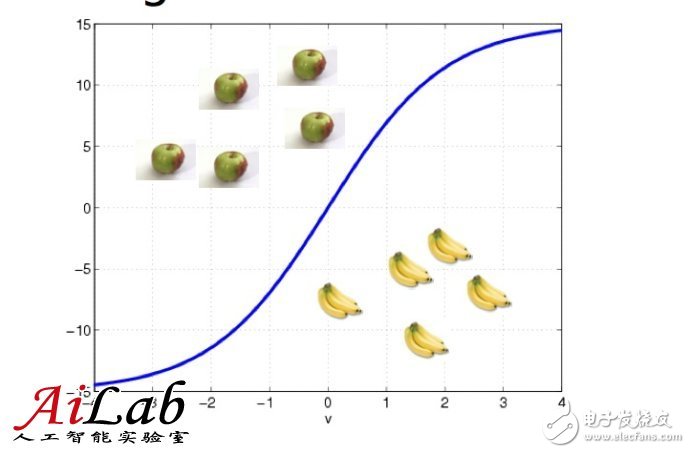

As a simple example, if there is only one feature, judgments about the fruit are often biased.

As more features are added, training becomes more accurate.
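The effect of adding features can be sketched by counting how many samples share their feature values with a sample of a different label, since no classifier can separate those. The fruit data and the helper `ambiguous_samples` below are hypothetical: with color alone, yellow and green fruit are ambiguous; adding shape removes the ambiguity.

```python
from collections import defaultdict

# Hypothetical fruit samples: (color, shape, label).
fruits = [
    ("red", "round", "apple"),
    ("green", "round", "apple"),
    ("yellow", "round", "apple"),
    ("yellow", "long", "banana"),
    ("green", "long", "banana"),
]

def ambiguous_samples(samples, feature_idx):
    """Count samples whose selected feature values also occur with a different label."""
    labels_by_key = defaultdict(set)
    for s in samples:
        labels_by_key[tuple(s[i] for i in feature_idx)].add(s[-1])
    return sum(1 for s in samples if len(labels_by_key[tuple(s[i] for i in feature_idx)]) > 1)

print(ambiguous_samples(fruits, [0]))     # color only: 4
print(ambiguous_samples(fruits, [0, 1]))  # color and shape: 0
```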

In engineering problems, features are often the most decisive factor.

Algorithm optimization may improve results by only 2-3%, whereas features may account for 50-60%. As a result, most of the effort in an engineering project usually goes into feature selection. Baidu's CTR prediction, for example, currently uses feature data on the order of 10 billion.

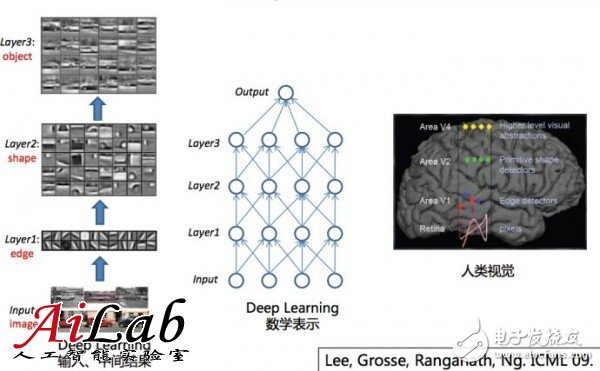

Finally, a few words about deep learning.

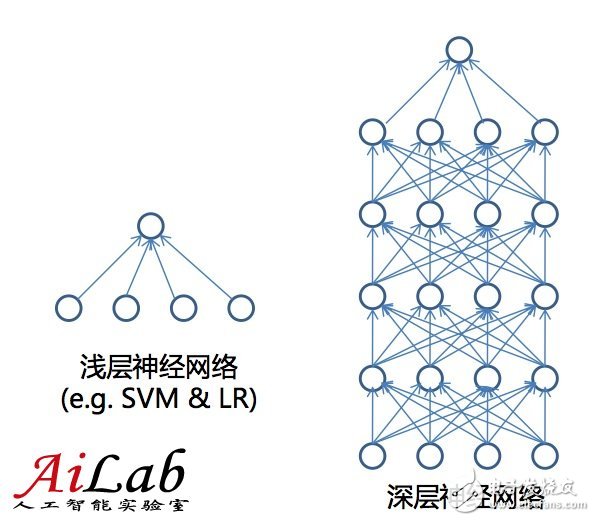

The biggest difference between deep learning and current machine learning algorithms (shallow learning) lies in the number of feature layers.

Current machine learning algorithms mostly have a single layer: they infer the result directly from the features.

Deep learning handles a problem more like a human does: it first infers some intermediate layers from the features, then infers the final result from those layers.

For example, in vision, points determine edges, edges determine shapes, and shapes determine the whole object.
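The structural difference can be sketched as two forward passes: a shallow model maps features to the result in one step, while a deep model first computes an intermediate representation. The weights below are arbitrary numbers chosen for illustration (not trained), and the helpers `dense` and `relu` are hypothetical; a real network would learn its weights and usually has many more layers and units.

```python
def relu(v):
    # Element-wise non-linearity; without it, stacked layers collapse into one linear layer.
    return [max(0.0, a) for a in v]

def dense(W, b, x):
    """One layer: W·x + b, with W given as a list of rows."""
    return [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(W, b)]

x = [1.0, 2.0, 3.0]  # raw input features

# Shallow: the result is computed directly from the features (one layer).
shallow_out = dense([[0.2, -0.1, 0.4]], [0.0], x)

# Deep: features -> intermediate representation -> result.
hidden = relu(dense([[1.0, 0.0, -1.0], [0.5, 0.5, 0.0]], [0.0, 0.0], x))
deep_out = dense([[1.0, 2.0]], [0.1], hidden)
print(shallow_out, hidden, deep_out)
```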

Shallow learning was used extensively in the past mainly because it has very good mathematical properties:

The optimization objective is a convex function

Convex functions have a large body of well-established optimization methods, and any local optimum of a convex function is also its global optimum

For convex optimization, see **Convex Optimization**, ~boyd/cvxbook/

Deep learning, by contrast, easily gets stuck in a local optimum instead of finding the global optimum.
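The difference between convex and non-convex objectives shows up even in one dimension. This sketch runs plain gradient descent on two made-up functions, the convex (x - 3)^2 and the non-convex x^4 - 3x^2 + x, from two starting points; the step size and step count are assumptions. On the convex function both starts reach the single minimum at 3. On the non-convex one, the start at -2.0 reaches the global minimum near -1.30, while the start at 2.0 stops in the local minimum near 1.13. Real deep-learning losses are high-dimensional, so this only illustrates the idea.

```python
def gradient_descent(grad, x0, lr=0.01, steps=5000):
    """Repeatedly step against the gradient from starting point x0."""
    x = x0
    for _ in range(steps):
        x -= lr * grad(x)
    return x

# Convex: f(x) = (x - 3)^2, f'(x) = 2(x - 3); a single minimum at x = 3.
convex_grad = lambda x: 2 * (x - 3)
# Non-convex: f(x) = x^4 - 3x^2 + x, f'(x) = 4x^3 - 6x + 1; two local minima.
nonconvex_grad = lambda x: 4 * x**3 - 6 * x + 1

results = {}
for start in (-2.0, 2.0):
    results[start] = (gradient_descent(convex_grad, start), gradient_descent(nonconvex_grad, start))
    print(start, results[start])
```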

For methods of solving deep learning models, see

G. Hinton et al., A Fast Learning Algorithm for Deep Belief Nets. Neural Computation, 2006.

See also Andrew Ng's most recent course.

The latest Chinese translation is here: http://deeplearning.stanford.edu/wiki/index.php/UFLDL Tutorial
